3 ways to improve our climate giving research

What we learned during the 2022 giving season

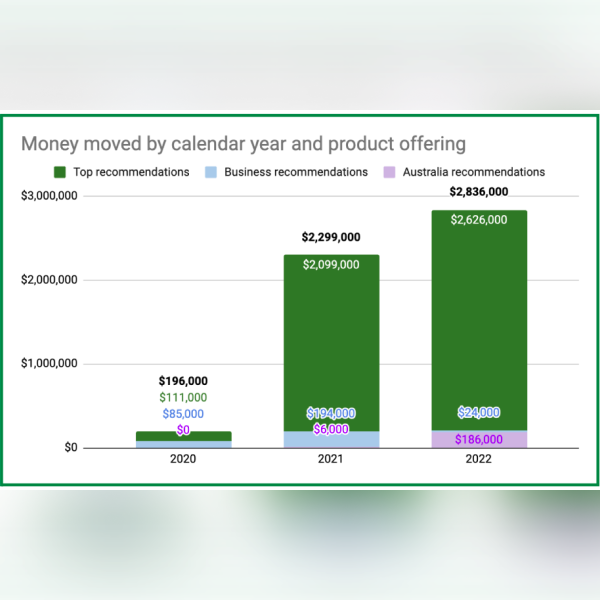

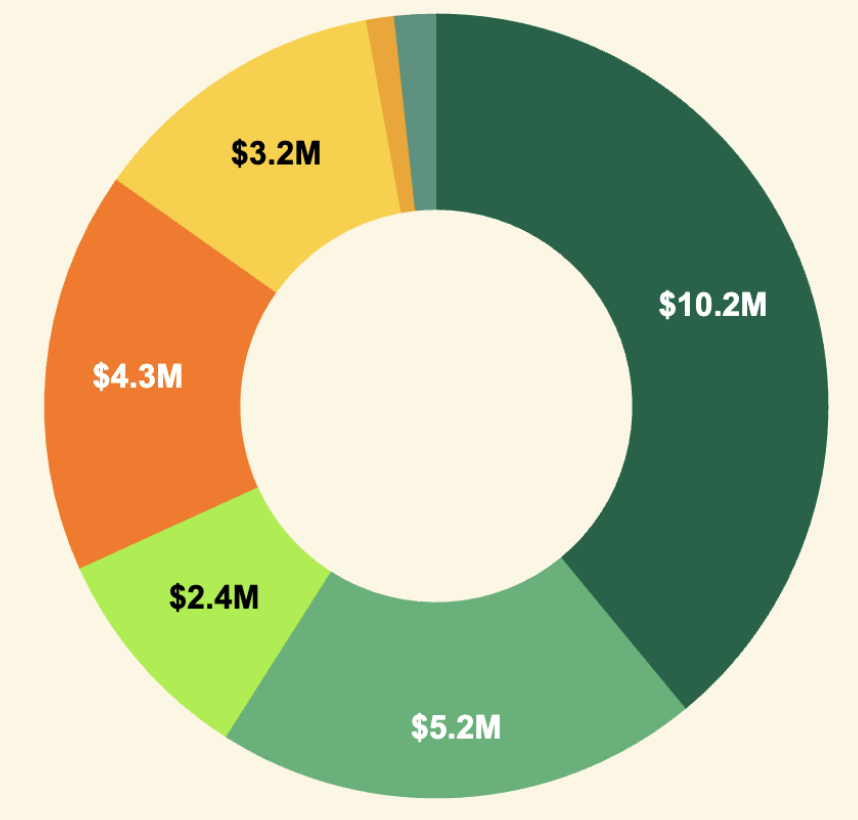

2022 was a year of growth for Giving Green. We doubled our team, added three new top recommendations, and developed a comprehensive strategy for businesses looking to take their climate action to the next level.

But as excited as we are about the positive impact we believe this growth will unlock, we are constantly on the lookout for ways to improve our research. At the start of 2023, we took a step back to identify where we can do better. Three review meetings, four spreadsheets, and 19,000 words later, we have a few ideas.[1] See below for three examples.

Early-stage prioritization

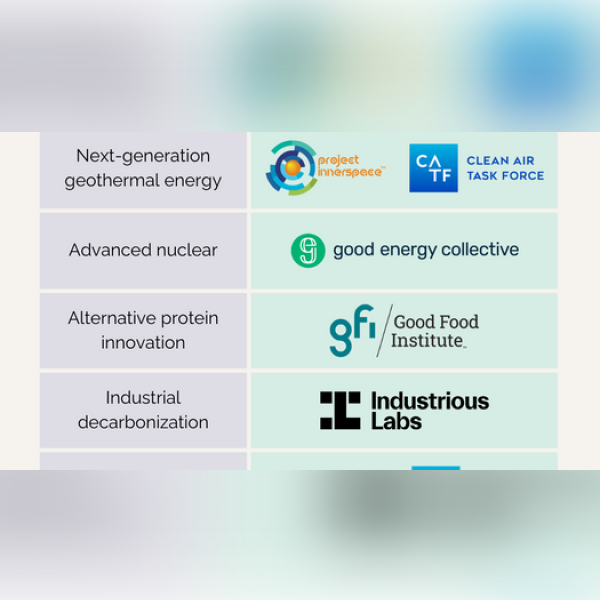

In 2022, we published an overview of the steps we take and the criteria we use to prioritize our research. However, we think our overview was overly simplistic and did not provide enough insight into topics we actually looked into.[2] For example, we think our deep dive on nuclear power made a reasonable case for why support efforts could be highly cost-effective, but did not provide any explanation of why we thought it was more promising than similar geothermal efforts, or whether we even looked into geothermal at all.

To help address this, we are creating a public-facing dashboard that will share more detail on how we prioritize topics and what we have considered. This embodies our values of transparency and collaboration, and we are hopeful it will help others better understand and engage with us on our process.[3]

Cost-effectiveness analyses

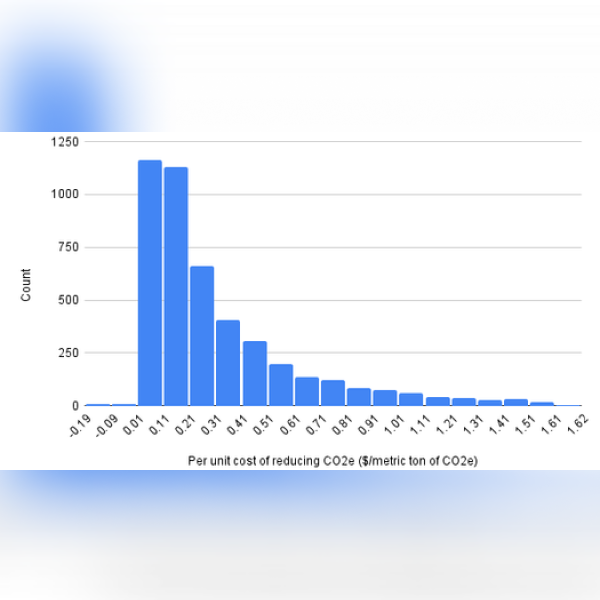

We often use cost-effectiveness analyses (CEAs) as an input into our assessment of cost-effectiveness. However, many of the opportunities we view as most promising also have highly uncertain inputs.[4] Because of this, many of our CEAs primarily served as a way to (a) identify the parameters that affect how much a donation might reduce climate change and (b) assess whether it is plausible that a donation could be highly cost-effective.[5] For example, our Good Food Institute CEA helped us delineate two pathways by which the Good Food Institute might accelerate alternative protein innovation, and estimate that it’s possible a donation is within the range of cost-effectiveness we would consider for a top recommendation.[6]

One of our core values is truth-seeking.[7] A CEA is one of the many tools in our toolbox, but we want to see whether it is possible to make it more useful. We are speaking with academics, researchers, and other organizations to consider ways to reframe our CEAs and/or increase the accuracy of inputs. We also plan to revise how we communicate when and how we use CEAs, in order to help readers better understand what we can (and cannot) learn from them.

External feedback

We are a small team that relies heavily on the expertise of others to guide our focus and critique our work. In the second half of 2022 alone, we had around 110 calls with various climate researchers, foundations, and organizations.[8] However, we were not always methodical about when we sought feedback, from whom we sought feedback, and how we weighted that feedback relative to other inputs.

Though we think it is important to remain flexible, we are drafting guidelines to help increase the consistency of our approach to feedback.[9] We also plan to introduce a more formal external review step for our flagship research products.[10] As part of our commitment to our value of humility, we are especially keen to ensure we receive a diversity of feedback and proactively engage with stakeholders who may have different or contrary views to our own.[11]

It is all uphill from here

Identifying issues is much simpler than crafting solutions, but we are excited for what lies ahead and look forward to improving our research to maximize our impact. If you have any questions or comments, we are always open to feedback. Otherwise, stay tuned for more!

Endnotes

[1] Actual meeting count is probably higher, but we include here any research meeting in which we specifically focused on reviewing our 2022 research process and products. Spreadsheets included compilations for content-specific/general issues and content-specific/general improvement ideas. The 19,000 word count is based on the content of the four spreadsheets.

[2] For example, our six-step “funnel” process describes the most formal and methodical way in which we initially seek to identify promising funding opportunities. In practice, we also use other approaches to add opportunities to our pipeline, such as speaking with climate philanthropists or reviewing new academic publications.

[3] See About Giving Green, “Our values” section.

[4] We think this is most likely the case for two main reasons: (1) many climate funders explicitly or implicitly value certainty in their giving decisions, so this means less-certain funding opportunities are relatively underfunded; and (2) we think some of the most promising pathways to scale (e.g., policy influence and technology innovation) are also inherently difficult to assess due to their long and complicated causal paths.

[5] We use rough benchmarks as a way to compare the cost-effectiveness of different giving opportunities. As a loose benchmark for our top recommendations, we use Clean Air Task Force (CATF), a climate policy organization we currently include as one of our top recommendations. We think it may cost CATF around $1 per ton of CO2 equivalent greenhouse gas avoided/removed, and that it serves as a useful benchmark due to its relatively affordable and calculable effects. However, this cost-effectiveness estimate includes subjective guess parameters and should not be taken literally. Instead, we use this benchmark to assess whether a giving opportunity could plausibly be within the range of cost-effectiveness we would consider for a top recommendation. As a heuristic, we consider an opportunity if its estimated cost-effectiveness is within an order of magnitude of $1/tCO2e (i.e., less than $10/tCO2e). For additional information, see our CATF report.

[6] Pathways: see [published] Good Food Institute (GFI) CEA, 2022-09-14, rows 10-14. Top recommendation cost-effectiveness: see endnote 5 for the CATF benchmark and the heuristic we use.

[7] See About Giving Green, “Our values” section.

[8] Estimate based on counting internal date-stamped call note files.

[9] These guidelines include suggestions for when to seek external feedback, whom to ask for external feedback, and the types of questions/feedback we should expect to value from different inputs.

[10] For example, we may have a professor specializing in grid technology review a deep dive report on long-term energy storage. We may also formalize the ways in which we ask Giving Green advisors for input.

[11] See About Giving Green, “Our values” section.
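
The heuristic in endnote 5 can be written out as a rough sketch. It assumes only the figures stated there: a loose benchmark of about $1/tCO2e and a cutoff of less than $10/tCO2e. The function names and the example inputs are hypothetical, and the sketch is not how we actually build our CEAs, which rely on subjective guess parameters and should not be taken literally.

```python
# Illustrative sketch only. The names below are hypothetical and are not
# taken from any Giving Green spreadsheet or tool.

BENCHMARK_USD_PER_TCO2E = 1.0  # loose CATF benchmark from endnote 5
ORDER_OF_MAGNITUDE = 10.0      # "within an order of magnitude" of the benchmark


def cost_per_tonne(donation_usd: float, tonnes_avoided: float) -> float:
    """Return dollars per ton of CO2 equivalent avoided or removed."""
    if tonnes_avoided <= 0:
        raise ValueError("tonnes_avoided must be positive")
    return donation_usd / tonnes_avoided


def within_benchmark_range(usd_per_tco2e: float) -> bool:
    """True if the estimate is below the $10/tCO2e cutoff from endnote 5."""
    return usd_per_tco2e < BENCHMARK_USD_PER_TCO2E * ORDER_OF_MAGNITUDE


if __name__ == "__main__":
    # Hypothetical inputs, chosen only to show the arithmetic.
    estimate = cost_per_tonne(donation_usd=1000.0, tonnes_avoided=250.0)
    print(estimate, within_benchmark_range(estimate))
```

A check like this only tells us whether an opportunity could plausibly fall within the range we would consider for a top recommendation. It says nothing about how uncertain the underlying inputs are.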

